Our Team’s Quest to Test AI’s Analysis Capabilities

The AI frenzy sweeping the insights industry sent researchers on an emotional rollercoaster this year, swinging from excitement and curiosity to feeling downright overwhelmed! We dipped our toes into broad language models like ChatGPT and specialized AI offerings tailored to qualitative research needs. Our team launched an initiative to test AI tools and determine the appropriate balance between artificial intelligence and human expertise. With so many new capabilities on the market, we were eager to put them through their paces in our research process, especially on a key deliverable, the Insight Summary. This 5–7-page report synthesizes the overall themes of a project, including participant verbatims and actionable next steps for our clients.

We asked ourselves, “Is there an AI tool that can generate an Insight Summary, comparable to the quality of what we produce, that would be meaningful to our clients?”

Our annual Pro Bono project offered an ideal opportunity to evaluate AI tools. First, we drafted a human-authored Insight Summary to serve as a benchmark for the machine-generated versions. We then input data into multiple AI platforms to give the tools a starting point for synthesis. Some platforms, typically generalist LLMs, could also accept additional contextual documents (e.g., background documents, client information, participant segmentation, desired output structure).

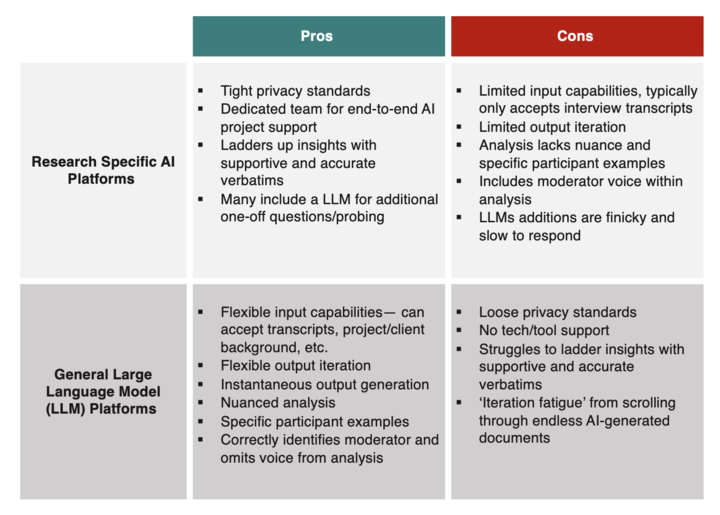

Both research-focused AI tools and general LLMs delivered relatively accurate analysis results. However, we discovered some drawbacks that researchers should be aware of before leaning on AI tools too heavily.

Ultimately, a large language model produced the highest-quality insights, generating a ~2-page report with detailed examples and a robust section of actionable next steps tailored to the client’s goal of leveraging the research for website and messaging optimization. Producing the final copy took roughly 3 hours of refinement and iteration with the tool. We made a few minor edits to this example AI-generated Insight Summary and sent it to our clients for their feedback.

Overall, brands were intrigued by an AI-generated Insight Summary, especially for quick-turn or preliminary deliverables on a tight timeline. However, they still want KNow’s full-service (and human brain-fueled!) analysis to convey the depth of insight needed to inform key business decisions. We’ll take that as a win and as reassurance that AI won’t make researchers obsolete just yet!

“My initial thought is it’s good for a prelim deliverable that gives a quicker theme overview than a normal process might take. However, I hesitate to lean too heavily on something like this.”

—Insights Strategy Leader

“This would be good for a topline summary, or a quick turn deliverable for some IDIs when you are short on time and full report isn’t needed or possible.” —Market Research Manager

So what’s next? Our team is committed to continuing to do what we do best: research! With the AI landscape rapidly evolving, we will keep testing AI tools that complement our practices, and we welcome the opportunity to evaluate new AI capabilities, whether in beta or released form! Please reach out to admin@knowresearch.com with any inquiries.